DFM4SfM (Paper@ICIP2023)


Dense Feature Matching for Structure from Motion

ICIP'2023

Simon Seibt¹, Bartosz von Rymon Lipinski¹, Thomas Chang¹ and Marc Erich Latoschik²

² Human-Computer Interaction Group, University of Wuerzburg

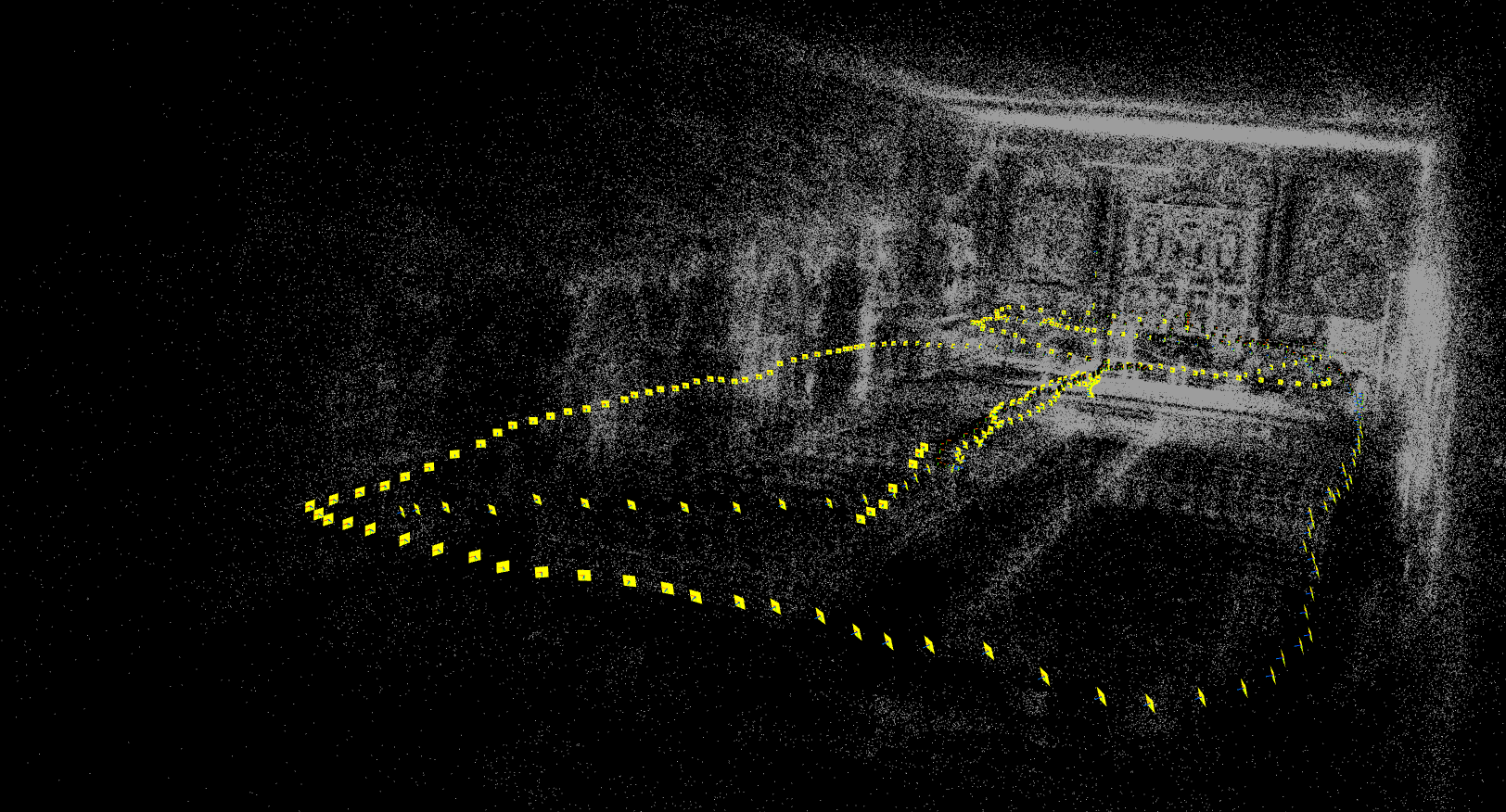

DFM4SfM result of the scene "Church" (Tanks and Temples dataset [1])

Abstract

Structure from motion (SfM) is a fundamental task in computer vision that recovers the 3D structure of a stationary scene from a set of images. Finding robust and accurate feature matches plays a crucial role in the early stages of SfM. In this work, we therefore propose a novel method for computing image correspondences based on dense feature matching (DFM) using homographic decomposition: the underlying pipeline refines existing matches through iterative rematching, detects occlusions and extrapolates additional matches in critical image areas between image pairs. Our main contributions are improvements of DFM tailored to SfM, namely global refinement and global extrapolation of image correspondences between related views. Furthermore, we propose an iterative version of the Delaunay-triangulation-based outlier detection algorithm for robust processing of repeated image patterns. Through experiments, we demonstrate that the proposed method significantly improves reconstruction accuracy.
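The abstract mentions an iterative version of Delaunay-triangulation-based outlier detection. Its exact criterion is not given on this page, so the following is only a hypothetical sketch of the general idea, under an assumed consistency test: triangulate the matched keypoints in the first image, call a triangle inconsistent when its vertex orientation flips in the second image, and repeatedly remove the single match involved in the most inconsistent triangles before re-triangulating. The function names and the orientation-flip criterion are illustrative assumptions, not the DFM4SfM implementation; the sketch uses NumPy and `scipy.spatial.Delaunay`.

```python
import numpy as np
from scipy.spatial import Delaunay


def signed_areas(pts, tris):
    """Twice the signed area of each triangle (sign encodes vertex orientation)."""
    a, b, c = pts[tris[:, 0]], pts[tris[:, 1]], pts[tris[:, 2]]
    ab, ac = b - a, c - a
    return ab[:, 0] * ac[:, 1] - ab[:, 1] * ac[:, 0]


def iterative_delaunay_filter(pts1, pts2, max_iter=100):
    """Hypothetical sketch: return indices of matches kept after iterative filtering.

    pts1, pts2: (N, 2) arrays of matched keypoint positions in image 1 and image 2.
    Assumed criterion: a triangle is inconsistent if its orientation flips in image 2.
    """
    pts1 = np.asarray(pts1, dtype=float)
    pts2 = np.asarray(pts2, dtype=float)
    active = np.arange(len(pts1))  # indices of matches still considered inliers
    for _ in range(max_iter):
        if len(active) < 4:  # too few points for a meaningful triangulation
            break
        # Triangulate the image-1 keypoints; simplices index into `active`.
        tris = Delaunay(pts1[active]).simplices
        flipped = np.sign(signed_areas(pts1[active], tris)) != np.sign(
            signed_areas(pts2[active], tris))
        if not flipped.any():
            break
        # Count how many flipped triangles each remaining match belongs to.
        votes = np.bincount(tris[flipped].ravel(), minlength=len(active))
        # Remove only the worst match, then re-triangulate in the next iteration.
        active = np.delete(active, np.argmax(votes))
    return active
```

Removing one match per iteration and re-triangulating is what makes this sketch iterative: correct neighbours of an outlier share its inconsistent triangles only until the outlier is gone, after which they are re-evaluated in a clean triangulation instead of being discarded along with it. The criterion actually used in DFM4SfM may differ.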

Paper

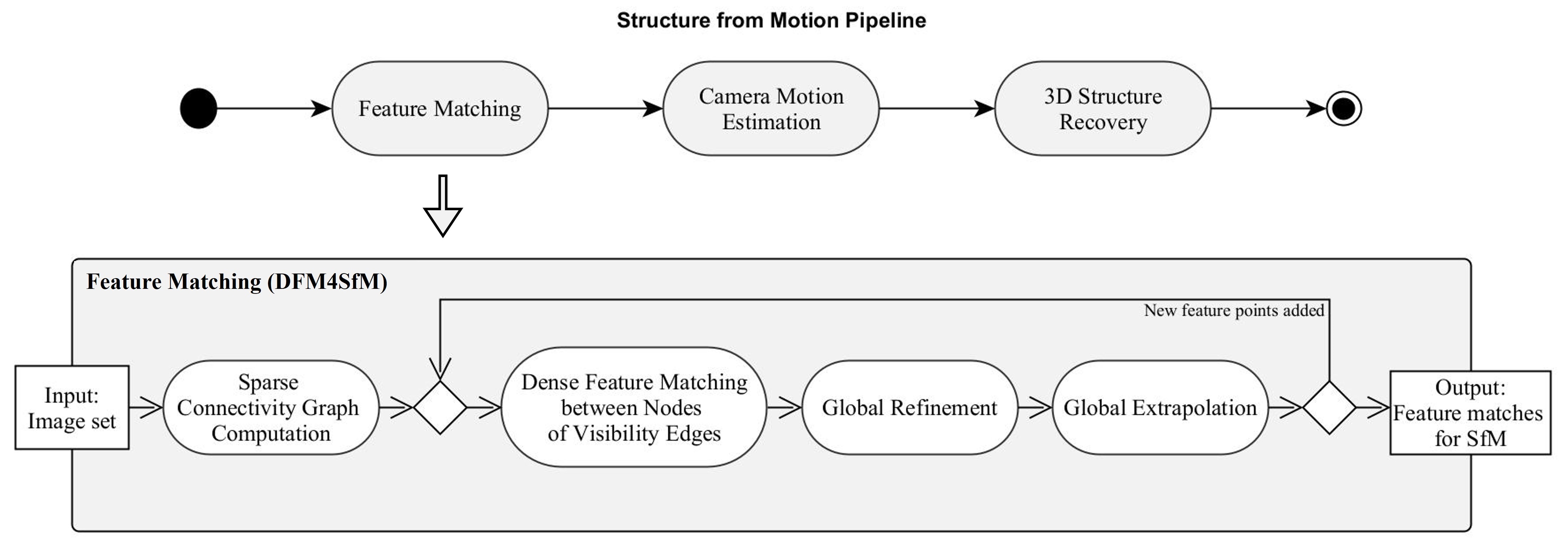

Pipeline Overview

UML activity diagram of the DFM4SfM pipeline, integrated into an SfM pipeline

Dense Feature Matching based on Homographic Decomposition

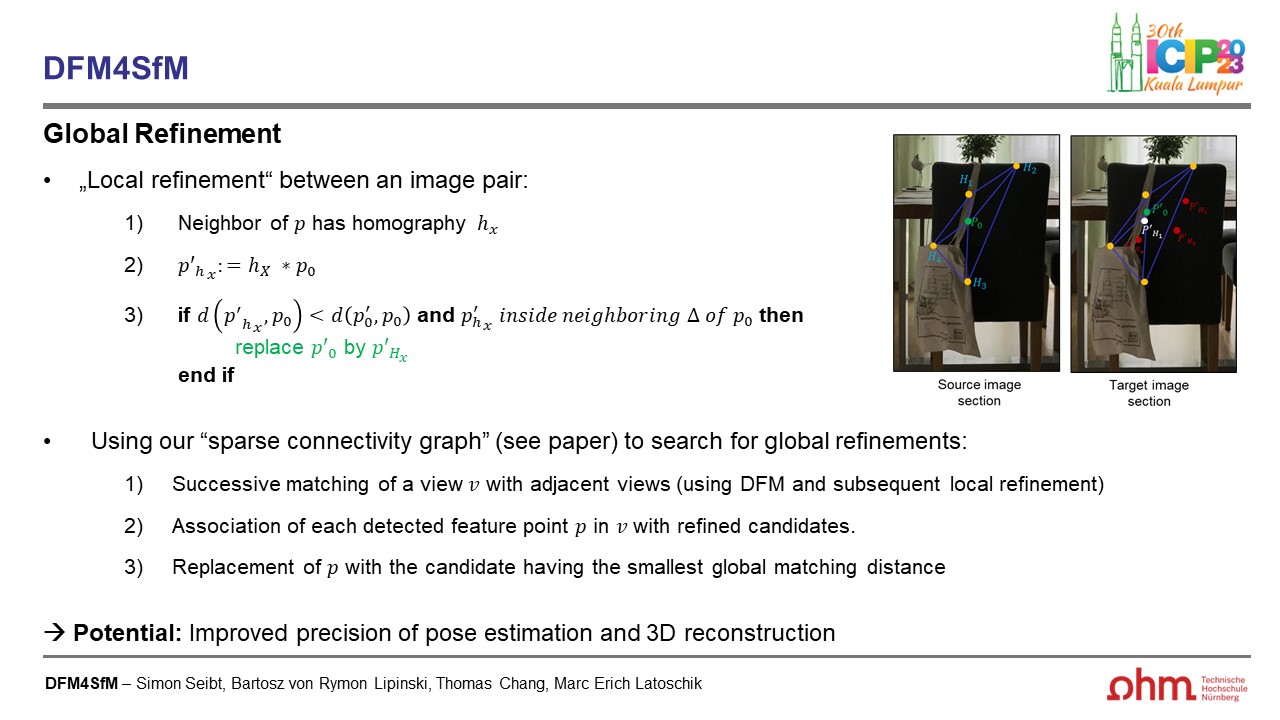

Global Refinement: Extension of positional refinement to a multi-view approach

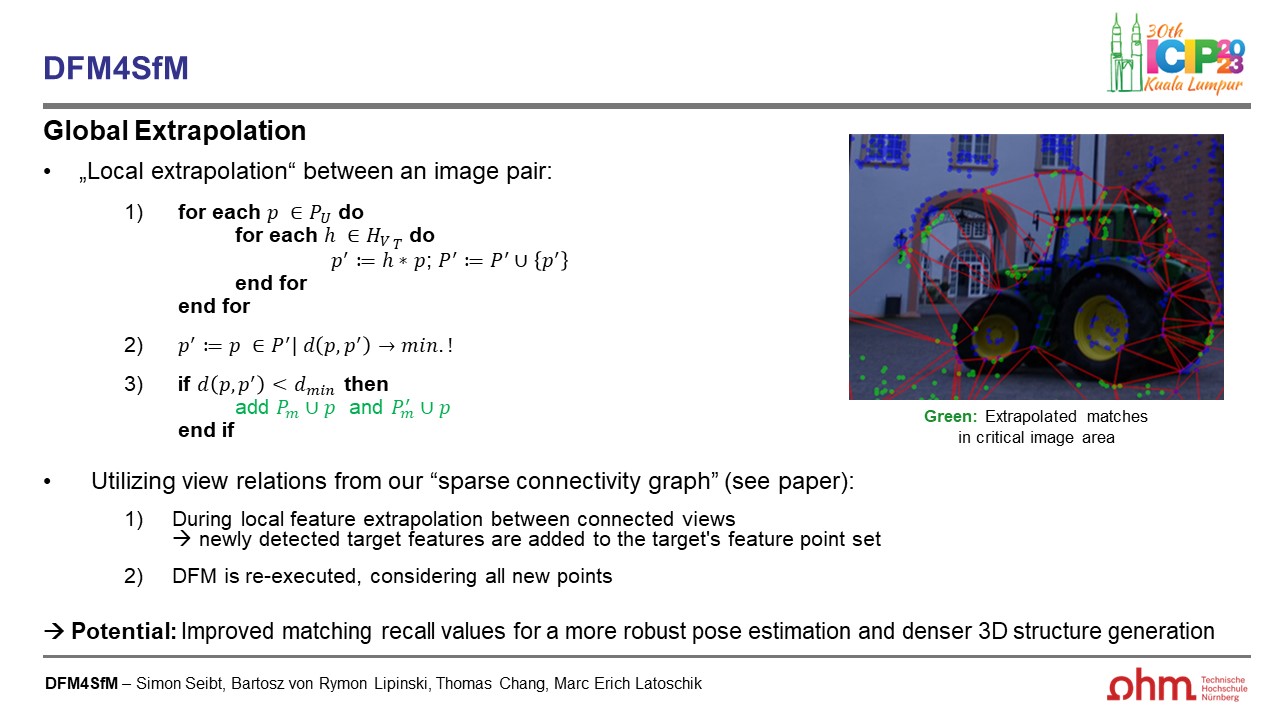

Global Extrapolation: Mutual extrapolation of feature matches by considering multiple adjacent views
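Extrapolating matches via homographic decomposition rests on treating an image region as locally planar, so that a homography H maps points of that region from one view into another. A minimal two-view sketch, assuming H is estimated with the textbook normalized DLT from at least four existing matches in the region (not necessarily how DFM4SfM estimates or selects its homographies): once H is known, further points of the region can be transferred into the other view as extrapolated match candidates. The function names `estimate_homography` and `transfer` are illustrative.

```python
import numpy as np


def normalize(pts):
    """Hartley normalization: zero mean, mean distance sqrt(2); returns homogeneous points and T."""
    mean = pts.mean(axis=0)
    scale = np.sqrt(2) / np.mean(np.linalg.norm(pts - mean, axis=1))
    T = np.array([[scale, 0.0, -scale * mean[0]],
                  [0.0, scale, -scale * mean[1]],
                  [0.0, 0.0, 1.0]])
    homog = np.column_stack([pts, np.ones(len(pts))])
    return (T @ homog.T).T, T


def estimate_homography(src, dst):
    """Normalized DLT from >= 4 correspondences (N, 2); returns 3x3 H with dst ~ H @ src."""
    src_n, T1 = normalize(np.asarray(src, dtype=float))
    dst_n, T2 = normalize(np.asarray(dst, dtype=float))
    rows = []
    for (x, y, w), (u, v, z) in zip(src_n, dst_n):
        # Two independent rows of the cross-product constraint dst x (H src) = 0.
        rows.append([0, 0, 0, -z * x, -z * y, -z * w, v * x, v * y, v * w])
        rows.append([z * x, z * y, z * w, 0, 0, 0, -u * x, -u * y, -u * w])
    # The solution is the right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows))
    H_n = vt[-1].reshape(3, 3)
    H = np.linalg.inv(T2) @ H_n @ T1  # undo the normalizations
    return H / H[2, 2]


def transfer(H, pts):
    """Map (N, 2) points through H and dehomogenize."""
    homog = np.column_stack([pts, np.ones(len(pts))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]
```

In a DFM-style setting, `src` and `dst` would be existing matches inside one image region, and `transfer` would propose positions for new keypoints in that region; such candidates would still need verification, for example by rematching.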

Visual Results

Scene: Castle (DMVS dataset [4])

Scene: Facade (ETH3D dataset [5])

Scene: Terrains (ETH3D dataset [5])

Scene: Courtyard (ETH3D dataset [5])

BibTeX

@inproceedings{Seibt2023DFM4SfM,
  author = {Seibt, Simon and Von Rymon Lipinski, Bartosz and Chang, Thomas and Latoschik, Marc Erich},
  title = {DFM4SfM - Dense Feature Matching for Structure from Motion},
  booktitle = {IEEE International Conference on Image Processing Workshops},
  year = {2023},
}

References

[1] A. Knapitsch, J. Park, Q.-Y. Zhou, and V. Koltun, “Tanks and temples: Benchmarking large-scale scene reconstruction,” ACM Trans. Graph., 2017.

[2] J. L. Schönberger and J.-M. Frahm, “Structure-from-motion revisited,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2016.

[3] P. Moulon, P. Monasse, R. Perrot, and R. Marlet, “OpenMVG: Open multiple view geometry,” in Int. Workshop Reprod. Res. Pattern Recognit., 2016, pp. 60–74.

[4] C. Strecha, W. von Hansen, L. Van Gool, P. Fua, and U. Thoennessen, “On benchmarking camera calibration and multi-view stereo for high resolution imagery,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2008.

[5] T. Schöps, T. Sattler, and M. Pollefeys, “BAD SLAM: Bundle adjusted direct RGB-D SLAM,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2019.